In life and in work, asking great questions can reshape your thinking and open doors to important answers. LLMs have made it amazingly easy and rewarding to ask questions about most things, but asking great data-backed questions about our businesses is still difficult - it requires knowing what data exists and where, writing and running SQL, fiddling with complex query builders, or asking an engineer or data analyst to do it.

At the last Maven team hackathon I built a data exploration tool that makes it easy to ask natural language questions about our business and get exact data as answers. It operates over almost all the data our company collects, and it’s being used by over half our team every week across all functions, from the support team to the CEO.

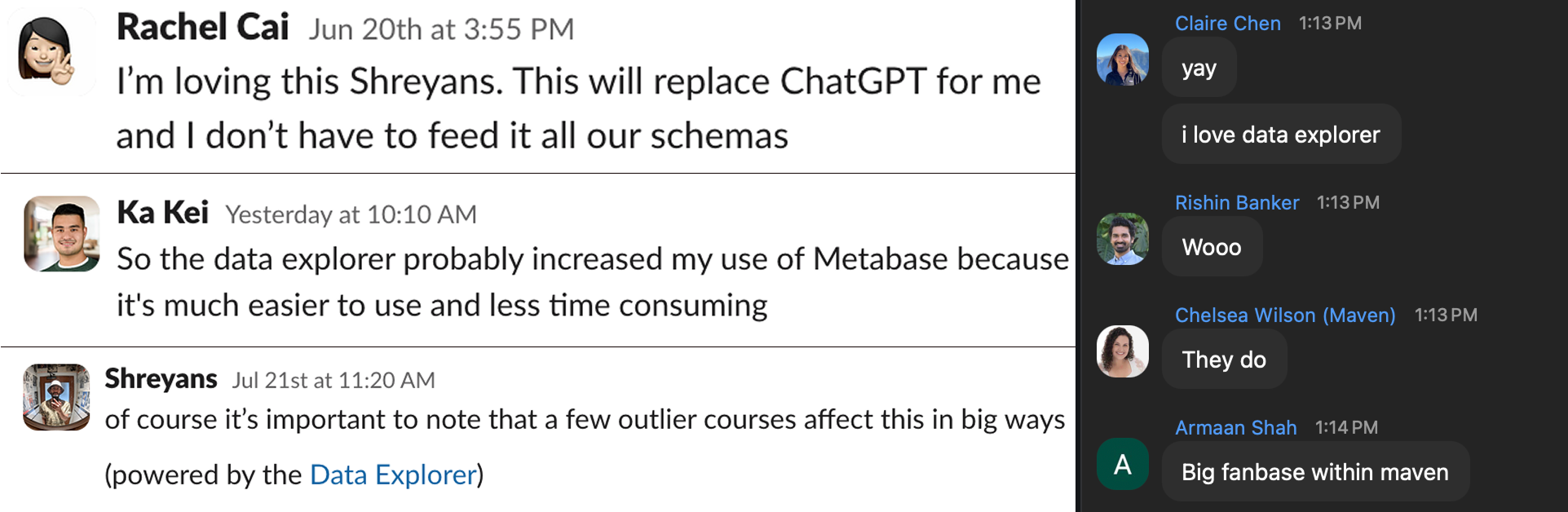

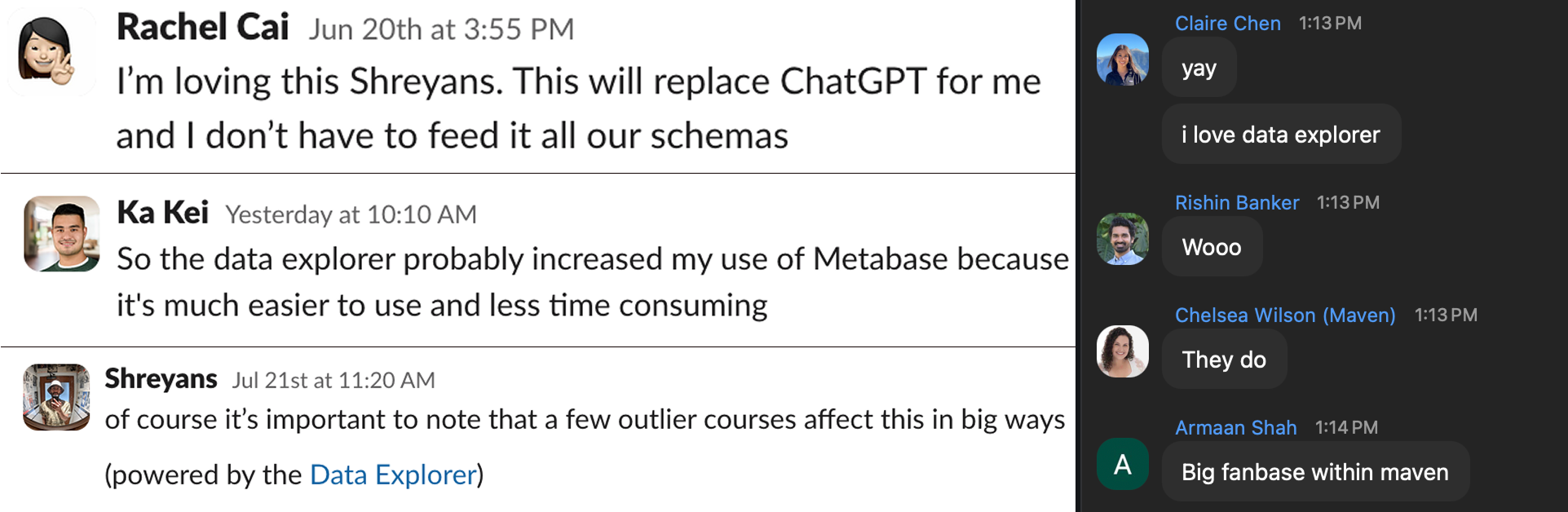

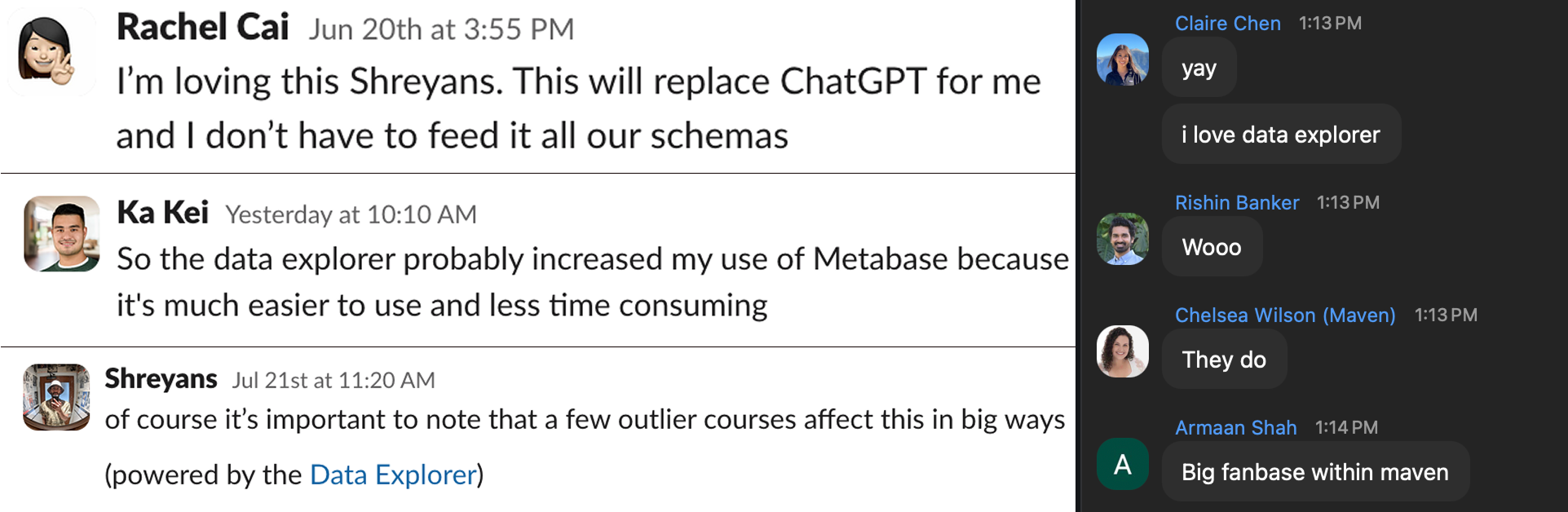

They seem to love it.

Here’s how and why I built it.

Problem: Even good data pipelines have limitations

Maven collects data from many sources: user actions in

Amplitude, email activity in

Customer.io, and application state in our Postgres database. This data is combined via

Segment and extended through

dbt transformations in

Snowflake. On top of this, we have

Metabase for querying, charting, and sharing cuts with the team.

This is a reasonably thoughtful data pipeline for a 20 person company, and Metabase’s query builder is easy enough to use for simple queries, but there are some challenges:

To query data you need to know that it exists, and what it’s called. We add new tables all the time and the names might not match the feature’s external name. Some of the data may live as columns, other data might live in JSON fields and the contents may be opaque to database tools. It’s hard for a casual analytics user to keep up and to remember enough details to ask all their questions.

Many interesting questions require complex queries which can get gnarly even with visual query builders. For example, I wanted to know which courses engineers had taken in the last 3 months. This required a multi-part query: first pattern matching over user job titles to find engineer users, separately joining courses with tags and enrollments to find engineering course users, and two kinds of joins to bring the pieces together. Simple question, complex query to generate.

Because of these challenges, people start their analysis by either taking an existing query and modifying it, or by posting questions in the #analytics channel in Slack and waiting for a PM or an engineer to help them answer it, which is a drag for everyone involved.

Solution: Thankfully LLMs are great at writing SQL

LLMs can help here because they’re great at two important things:

First, LLMs are excellent at writing well-structured SQL. SQL is inherently structured with a small, well-defined grammar. Natural language queries are declarative like SQL. And there is a ton of training data for SQL and responses are easy to verify. Even the engineers on the team that are very comfortable with SQL use ChatGPT to help them write the more complex queries.

Second, LLMs can accurately handle very large context windows. Hallucinations still happen but there is

active effort and

good progress in increasing factuality in LLM responses. And as the context windows of LLMs have grown from 8k to millions of tokens, work has been done to measure and improve on the “

needle-in-a-haystack” test. Gemini 2.5 Pro

claims 100% recall from a context of 530k tokens, and 99.7% recall for a million tokens!

So if we can give an LLM a complete description of our data model - the tables, the rows, the names of fields in JSON columns - it wouldn’t be able to answer our question directly, but it might figure out how to write accurate queries to answer these questions. This turns out to work quite well.

Solution: The Data Explorer, step-by-step

What I built was a “chat with an LLM” page on our admin site backed by an API endpoint that sends the user’s query and a description of our data model to Gemini 2.5, gets back SQL with an explanation, and runs that SQL in our Snowflake warehouse through the Metabase interface. There are no microservices here - both the client and server sides were built into our main apps.

In more detail:

We first construct our context to contain a description of the data model. This context is a giant text blob containing four components:

Our Postgres tables are defined through SQLAlchemy in one 3k line `models.py` file so that goes in first.

We have Pydantic schemas for all JSONB columns, so we include our entire `schemas.py` file next. If we were short on context window space, we’d cut out the irrelevant schemas, but to start with I include everything.

dbt models are defined as `.sql` files in a separate repo, so we put those in as well.

We have some custom models defined in Metabase, so we download a JSON representation of those and put them in last.

Each of these components is a different format, but the LLM understands each one with no additional explanation. And since this feature is built into our primary application, it has direct access to the latest table definitions and is always up to date with our data model.

We then prompt Gemini with this context, task instructions, and the user’s query. The query comes in via a chat interface that was largely built by Cursor. We prompt our LLM of choice via

litellm’s OpenAI-compatible chat completions API with a `system` message containing the data model context and a `user` message with the user’s query.

This is the exact system prompt we use (notice the spots where the schemas above get inserted). We add specific instructions to handle edge cases, formatting specifications, and define application specific terms. We use Gemini because it has the largest context window but most of the latest LLMs should work.

When an answer comes back from the LLM, we extract the SQL and turn it into a Metabase link using Metabase’s undocumented

question URL API, in which we encode the entire SQL query as a base64 string. We return this Metabase link to the front end along with the LLM’s explanation of the query. The user can then go to that Metabase link to run the query and see the results, or can keep chatting with the tool to modify the query. We could directly execute the SQL we get back from the LLM, but we don’t do this! Even with the safety of a read-only replica, we’d have to consider pagination, the UI for displaying data, and a number of other concerns better left to Metabase or a proper querying tool.

The work wasn’t done until people were using the tool, so the final step was to market it internally. I started pasting the exact questions posted in our Slack #analytics channel into the data explorer and replying with a link to the chat. When I’d ask an interesting question or get a surprising query, I’d share the result with the team and credit the Data Explorer. With regular reminders, usage crept up.

Challenges and lessons

A couple of months in, most of the team has tried the tool and around a third of the team uses it every week. The heaviest user is one of our PMs, who says it has replaced a lot of his direct use of Metabase. I’m the second heaviest user, and it has greatly increased the number of data questions I ask every week. I use it to create csvs for various reasons, and I satisfy my curiosity on many passing questions. After us, the support and instructor success teams use it most often, usage which would have certainly required assistance from the engineering team before this tool. Most of the team has used it for at least one one-off question.

In this time, I’ve noticed a few failure modes. First, people expect more from the tool than it can do, and the edges are non-obvious. They might expect it to read a URL (a webpage, a previous query) and answer questions based on it. Or they might expect us to have data that we don’t collect or isn’t in Snowflake yet. Some failures point to future improvements. Second, the SQL can certainly be subtly wrong, primarily via hallucinated field names, and it’s important to read the query to verify it. Finally, infrequent users forget to use the tool and go back to asking questions in #analytics that the tool can answer. Adoption in a constant effort - a truth that applies to many product features.

There are unexpected positives too: because the tool sees the full data model, it may find paths to answering a question that I may not have taken. For example, I asked which of our instructors were in Europe and it used an `instructor_timezone` field that I had forgotten we collected. And assuming one reads the SQL that is generated (I do, but don’t expect most would), it’s also possible to learn SQL techniques like

CTEs, and rank windows from its output. The most important positive of course is that because asking questions is now cheap, I ask way more questions.

All this was possible because of two strong foundations: We’ve insisted on type checking across our Python code base, which means that our ‘schemaless’ JSON is also typed and well defined when used in the application. And our prior data work to connect different sources using Segment and dbt into Snowflake allows us to write and run complex queries in Metabase. That said, the core of the tool would be useful with just a Postgres db and `psql` in read-only mode to run the generated queries.

If your teammates would value a way to ask and answer more data-backed questions and you have a few days to spare, consider building this. If you’ve got questions about it, message me at

data@shreyans.org.

I’m Shreyans, co-founder and CTO of

Maven, the missing grad school for tech, and home to some incredible engineering/AI courses. I love building useful products with small, excellent teams, and I think we’ve got a pretty great one here at Maven.

We’re hiring senior/staff engineers to work on our tight-knit, remote team.