Agent Engineering Bootcamp: Developers Edition

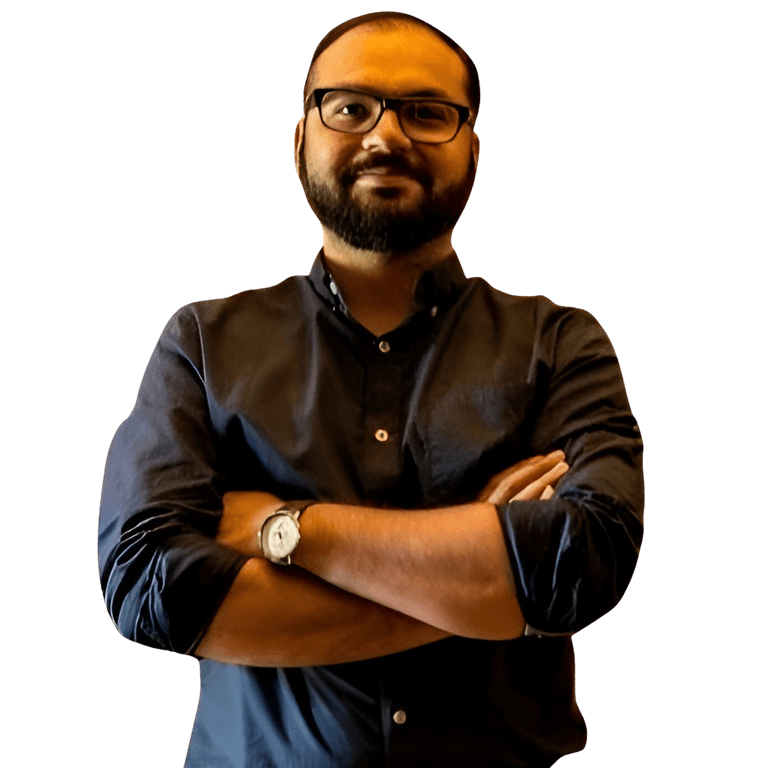

Hamza Farooq

Founder | Ex-Google | Prof UCLA & UMN

Zain Hasan

Staff AI @Together AI | Founder

The Agent Technical Course: Build and Deploy Production-Grade Gen AI Products

A 7-week technical deep dive for AI builders ready to design agent systems that reason, route, and adapt.

7 instructor-led sessions · 7 office hours · 1 Demo Day

What You'll Build

1. Agentic RAG with Routers: Master stateful RAG with intelligent routing, reflection, memory, and multi-hop search strategies beyond naive cosine similarity.

2. Hosting & Quantizing LLMs: Deploy production-grade models using GPTQ/GGUF quantization via Ollama (local) and RunPod (cloud) with FastAPI and auto-scaling.

3. Semantic Caching: Build cache layers from scratch using vector proximity and feedback loops to reduce latency and costs.

4. Knowledge Graphs: Implement graph-based memory with text-to-Cypher generation using Neo4j/Memgraph and DSPy for structured reasoning.

5. ReAct Agents: Create Reason+Act pipelines in Python and n8n for human-in-the-loop workflows with visual orchestration.

6. Production Deployment: Ship multi-agent systems using Google's ADK, MCP, A2A collaboration, Llama Guard safety rails, and GCP monitoring.

Prerequisites: RAG/LLM experience, Python, APIs, cloud basics

👉 This course is for builders who ship real AI.

What you’ll learn

Master Advanced Techniques for Building and Optimizing Agentic RAG Systems and Multi-Agent Workflows — Designed for Builders

Design and deploy intelligent retrieval systems that reason, route queries, and adapt across multi-turn conversations—far beyond naive RAG

Framework: Implement stateful RAG architectures with routers, reflection loops, and multi-hop reasoning strategies

Hands-on: Build custom routing logic that knows when cosine similarity fails and switches between retrieval strategies

Host, quantize, and serve production LLMs locally and in the cloud with cost-efficiency and low latency

Technical deep-dive: Master quantization techniques (GPTQ, GGUF, QLoRA) to reduce model size by 4-8x with minimal loss in output quality

Infrastructure: Deploy models using Ollama (local), RunPod (cloud), and FastAPI with auto-scaling capabilities
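As a rough illustration of where a 4-8x size reduction can come from, the sketch below estimates weight storage at different bit widths. It is back-of-the-envelope arithmetic, not course material: the 7-billion-parameter count and the `model_size_gb` helper are assumptions chosen for illustration, and real GPTQ or GGUF files carry extra overhead (scales, zero points, metadata) that this ignores.

```python
def model_size_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (weights only, no overhead)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 7B-parameter model, used only as an example.
NUM_PARAMS = 7_000_000_000

sizes = {bits: model_size_gb(NUM_PARAMS, bits) for bits in (32, 16, 8, 4)}

for bits, size in sizes.items():
    # Reduction is measured against the 32-bit baseline.
    reduction = sizes[32] / size
    print(f"{bits:>2}-bit: {size:5.1f} GB ({reduction:.0f}x smaller than 32-bit)")
```

Under these assumptions, 8-bit weights are 4x smaller than a 32-bit baseline and 4-bit weights are 8x smaller. Measured against a 16-bit baseline, the same 4-bit weights give a 4x reduction.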

Outcome: Build intelligent caching layers that recognize similar queries, avoid redundant LLM calls, and improve over time.

From scratch: Code semantic distance functions using vector embeddings and proximity thresholds

Architecture: Discussion on cache hit/miss systems with reranking and feedback loops that learn from usage patterns
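To make the idea of a proximity threshold concrete, here is a minimal sketch of a semantic cache lookup. It is not the course's implementation: the `SemanticCache` class, the 0.9 threshold, and the two-dimensional toy vectors are all assumptions for illustration. In practice the embeddings would come from an embedding model, and reranking and feedback loops are left out.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Toy cache: returns a stored response when a query embedding is close enough."""

    def __init__(self, threshold=0.9):
        self.threshold = threshold  # assumed value; tune per application
        self.entries = []  # list of (embedding, response) pairs

    def store(self, embedding, response):
        self.entries.append((list(embedding), response))

    def lookup(self, embedding):
        """Return the best cached response at or above the threshold, else None."""
        best_score, best_response = 0.0, None
        for cached_embedding, response in self.entries:
            score = cosine_similarity(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.9)
cache.store([1.0, 0.0], "cached answer")

hit = cache.lookup([0.99, 0.05])   # nearly the same direction: cache hit
miss = cache.lookup([0.0, 1.0])    # orthogonal vector: cache miss
```

On a miss, the caller would fall through to the LLM and store the new response. The linear scan is fine for a sketch; a larger cache would typically use a vector index instead.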

Outcome: Move beyond flat retrieval to structured reasoning by building graph-based memory with natural language to Cypher query generation

Modeling: Design graph schemas for agent memory, extracting entities and relationships from unstructured text

Tools: Implement with Neo4j or Memgraph and connect to RAG pipelines for context-aware graph traversal

Outcome: Deploy coordinated multi-agent workflows with agent-to-agent communication, human-in-the-loop patterns, and production guardrails.

ReAct paradigm: Build modular Reason+Act pipelines with tool use, planning, and reflection in both Python and n8n

ADK & MCP: Combine Google's Agent Development Kit with the Model Context Protocol for enterprise-grade orchestration

Who this course is for

Machine Learning Engineers exploring different techniques to scale LLM solutions

Researchers who want to delve into various aspects of open-source LLMs

Software Engineers looking to learn how to integrate AI into their products

What's included

Live sessions

Learn directly from Hamza Farooq & Zain Hasan in a real-time, interactive format.

Lifetime access

Go back to course content and recordings whenever you need to.

Community of peers

Stay accountable and share insights with like-minded professionals.

Certificate of completion

Share your new skills with your employer or on LinkedIn.

Maven Guarantee

This course is backed by the Maven Guarantee. Students are eligible for a full refund through the second week of the course.

Course syllabus

11 live sessions • 43 lessons • 6 projects

Week 1

Apr 11

Session 1: World of Agents & Quantization

Optimizing and Deploying Large Language Models

Recordings on Transformers

Week 2

Apr 15

Office Hours

Apr 18

Session 2: KV Caching and Speculative Decoding

KV Caching and Speculative Decoding

Free resource

Enterprise Knowledge Management and Multi-Agent Architecture

Streamlining Knowledge Access with Enterprise RAG

RAG breaks down data silos, enabling seamless access to Enterprise Knowledge for smarter, faster decision-making.

Empowering Decision-Making with AI Agents

Multi-agent systems automate workflows, enhance collaboration, and provide real-time support for complex tasks.

Explore Practical Applications of AI Agents

Get hands-on insights into building AI agents from scratch, understanding their architecture, and deploying them.

Schedule

Live sessions

2-3 hrs / week

Sat, Apr 11

4:00 PM—6:00 PM (UTC)

Wed, Apr 15

4:00 PM—4:45 PM (UTC)

Sat, Apr 18

4:00 PM—6:00 PM (UTC)

Projects

1-3 hrs / week

Async content

1-3 hrs / week

$2,500 USD