Beyond Evals: Designing Improvement Flywheels for AI Products

Aishwarya Naresh Reganti

AI Founder & Advisor to F500s | Ex-AWS

Kiriti Badam

Applied AI @ OpenAI | Ex-Google


Move beyond one-time evals to continuous improvement flywheels for AI products

Most teams building AI products today know about evals and LLM judges, but they’re unsure how to turn them into actionable improvement signals once the product is in production. Just throwing in LLM judges won’t magically fix your product.

This course shows how to use offline evals, online evals, and production monitoring to drive continuous improvement, based on real production experience.

We believe that going forward, a thoughtfully designed data flywheel will be the moat for AI applications. This course shows you how to build it.

This course is for people who’ve already built AI systems and are stuck with evals that are noisy, expensive, or useless.

If you need something more foundational, check out our top-rated flagship enterprise AI course, taken by over 1,500 builders and leaders.

For questions or bulk enrollments, please email problemfirst.ai@gmail.com.

We follow a flipped-classroom format: lecture content is async, and we meet twice a week for office-hours-style sessions so that live time stays interactive.

What you’ll learn

Evals aren't your product moat. A continuous improvement flywheel is.

Learn why evaluation is often reduced to LLM judges, and why that breaks real AI products.

Understand evaluation as a lifecycle practice, not a one-time, pre-deployment check.

Build an intuition for continuously improving AI products through a connected evals and monitoring loop.

Learn how to build reference datasets before your product ships.

Identify key stakeholders (product, engineering, SMEs) who can help build an accurate estimate of expected behavior.

Design improvement setups that evolve as the product evolves, including when to add or retire evals.

Understand how evaluation strategies differ across RAG systems, tool-calling workflows, and multi-turn systems.

Learn which categories of evals matter for different system behaviors and failure modes.

Learn when to use LLM judges, when not to, and how to avoid over-relying on them.

Learn which production signals matter, both explicit and implicit, and how to use them for improvement.

Understand how behavior drifts in production and how monitoring helps surface it early.

Close the loop by feeding production signals back into evals and datasets, and learn how this data flywheel becomes a product moat.

Who this course is for

Software/AI Engineers, Strategists, Data Professionals, Solution Architects, and Consultants looking for systematic evaluation practices.

Business Leaders and Product Managers seeking a technical understanding of the AI product lifecycle and intentional iteration.

Founders and teams stuck on systematic evaluation and monitoring, unsure how to turn signals into real product improvements.

Prerequisites

A good understanding of AI system design

You should have built small AI applications before and be familiar with concepts like RAG, MCP, and tool use.

Working knowledge of Python/Coding (Optional)

The course includes optional Python assignments. You're free to use coding agents, but basic coding skills are recommended.

Course syllabus

Week 1

Mar 14: Welcome Lecture

Week 2

Week 1: Foundations of AI Evaluation & Monitoring

Mar 17: US/UK/EU Friendly Office Hours (Week 1)

Mar 20: APAC/US Friendly Office Hours (Week 1)

Schedule

Live sessions: 2 hrs / week

Sat, Mar 14, 4:00 PM to 5:00 PM (UTC)

Tue, Mar 17, 4:00 PM to 5:00 PM (UTC)

Fri, Mar 20, 1:00 AM to 2:00 AM (UTC)

Projects: 2 hrs / week

Async content: 2 hrs / week

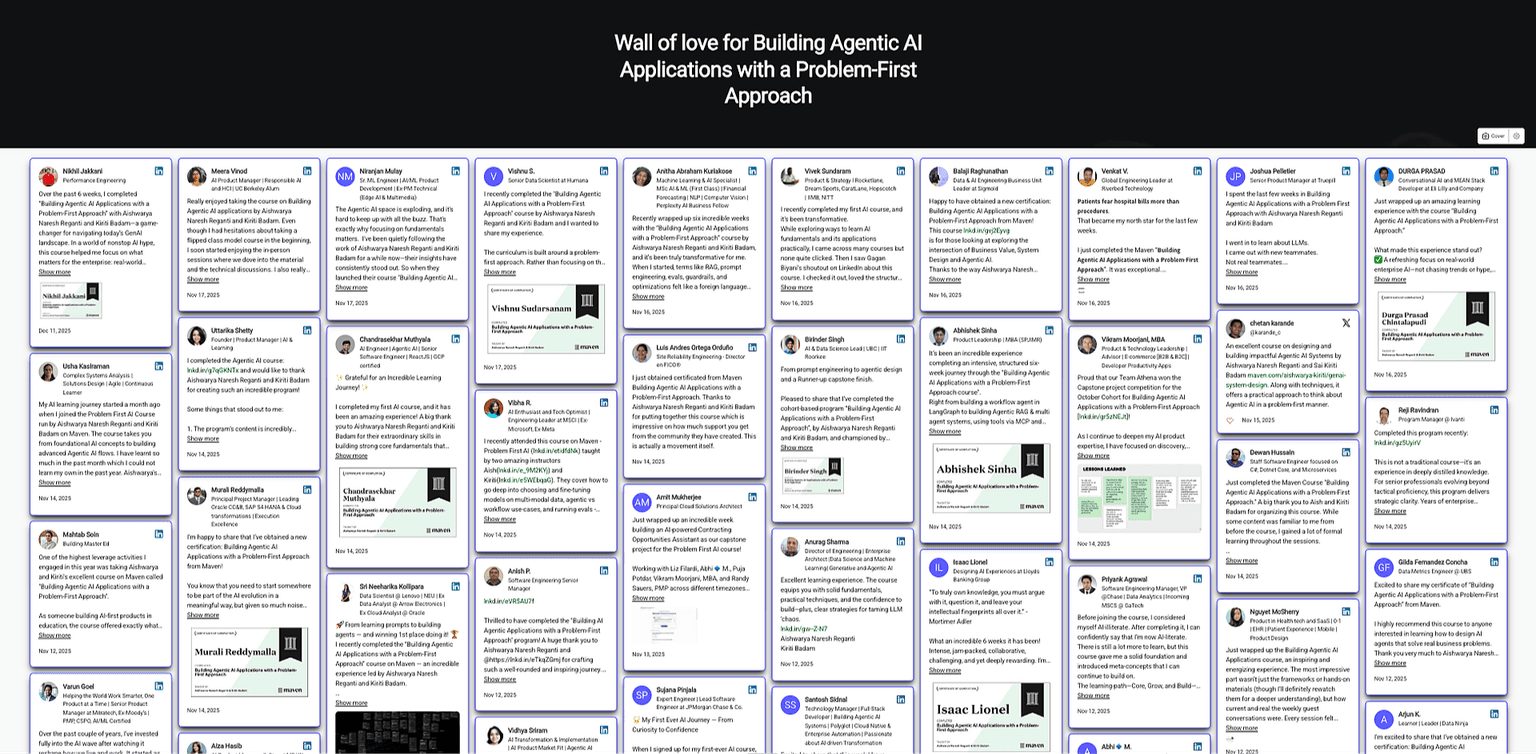

Wall of Love for our #1 Enterprise AI Course

Link: bit.ly/3MYmBOF

$2,500 USD
