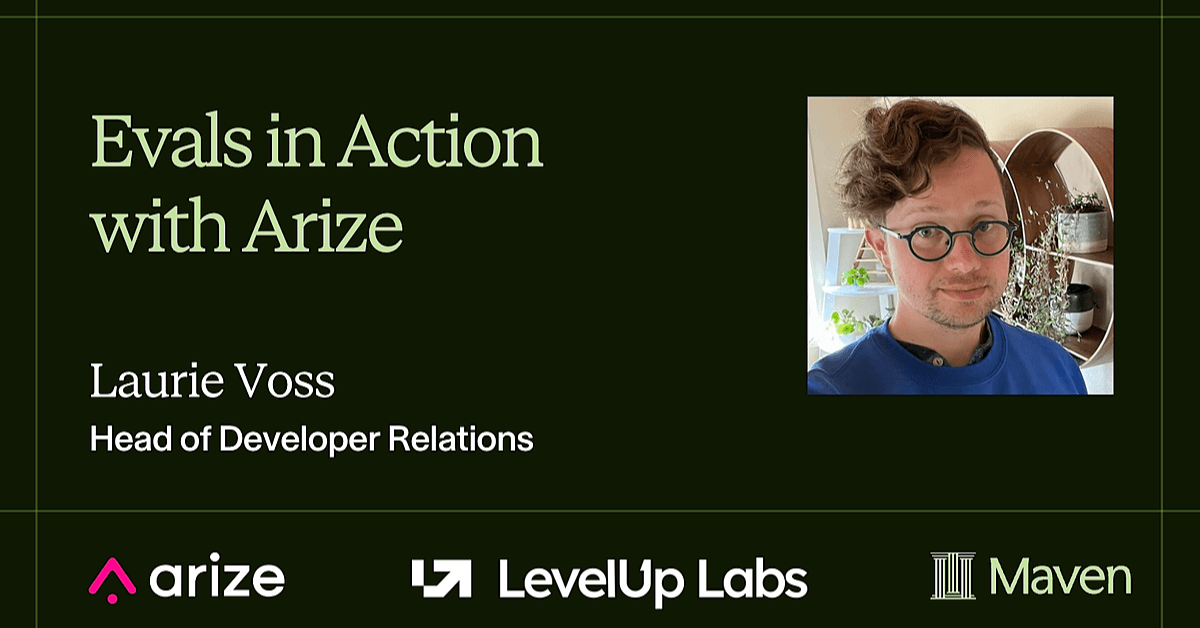

Evals in Action With Arize

Hosted by Laurie Voss

Fri, Feb 27, 2026

5:00 PM UTC (45 minutes)

Virtual (Zoom)

Free to join

What you'll learn

Build your first LLM-as-a-Judge evaluator

Trace your AI system end-to-end

Choose the right evaluator for each failure mode

Why this topic matters

You'll learn from

Laurie Voss

Head of DevRel at Arize; co-founder of npm, Inc.

Laurie Voss is Head of Developer Relations at Arize AI, where he helps teams build better AI applications through observability and evaluation. Previously, he was VP of Developer Relations at LlamaIndex and Senior Data Analyst at Netlify. He also co-founded npm, Inc. (acquired by GitHub), where he served as COO and CTO. With more than 20 years in developer tools and data analysis, Laurie brings a practical, code-first approach to AI evaluation.