AI Systems Under Pressure: Red-Team Before You Ship

Hosted by Krystal Jackson

In this video

What you'll learn

- Define the Real AI Problem

- See How AI Systems Break Under Pressure

- Test Assumptions Before You Ship

Why this topic matters

You'll learn from

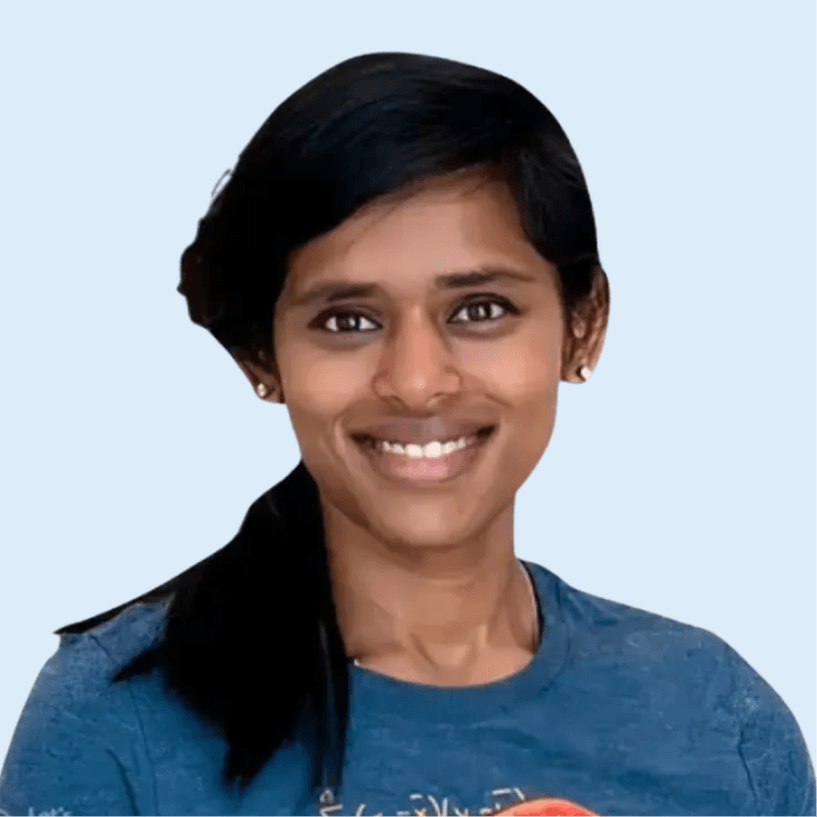

Krystal Jackson

AI Standards Development Researcher

Krystal Jackson is an AI security researcher focused on the global security implications of artificial intelligence. She is a Non-Resident Research Fellow with the Center for Long-Term Cybersecurity’s AI Security Initiative, where she studies how advanced AI systems shape risk, resilience, and security outcomes across borders.

Previously, Krystal was a Research Associate at the Frontier Model Forum, where she worked with industry leaders to advance AI-cyber safety and AI security. She also served as an AI Capabilities Analyst at the Cybersecurity and Infrastructure Security Agency (CISA), driving key AI initiatives within the Infrastructure Security Division. Krystal holds an M.S. in Information Security Policy and Management from Carnegie Mellon University.

Career highlights

- Non-Resident Research Fellow, Center for Long-Term Cybersecurity (AI Security Initiative)

- Research Associate, Frontier Model Forum, advancing AI-cyber safety with industry leaders

- AI Capabilities Analyst, CISA, leading AI initiatives in the Infrastructure Security Division

- Work featured at RSA Conference, Stanford Trust and Safety Research Conference, INFORMS, and AAAI

- Research roles with the Center for AI and Digital Policy Research Clinic, CSET, and the U.S. Census Bureau

- M.S., Information Security Policy and Management, Carnegie Mellon University

