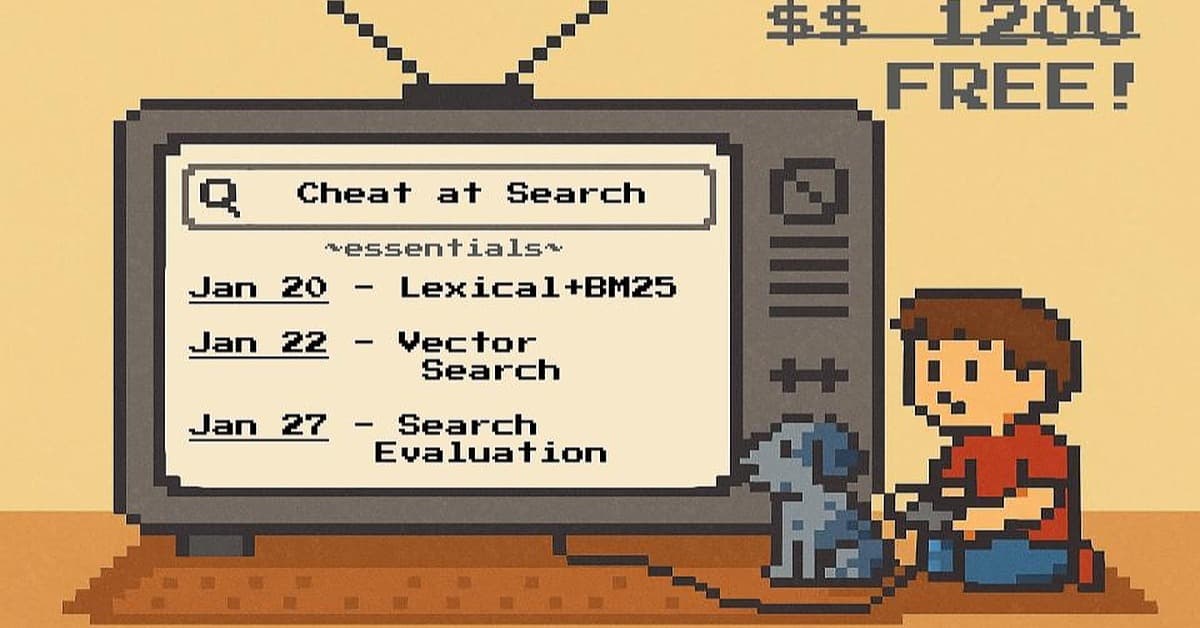

Cheat at Search Essentials: Evaluation, NDCG, and pals

Hosted by Doug Turnbull

In this video

What you'll learn

Basics of search relevance evaluation

Where practice diverges from theory

Pros / cons of different types of labeled relevance data

You'll learn from

Doug Turnbull

Ex-Reddit, Ex-Shopify. Author of AI Powered Search and Relevant Search

Doug leads search teams past the BS to find real opportunity in emerging search technologies. He’s enthusiastic about the evolving landscape, while staying mindful of the gap between marketing and reality. Good search strategy separates promising opportunities from dangerous sand traps. Doug helps teams find a clear, practical path forward.

He led machine-learning-driven search at Reddit and Shopify, served as CTO of OpenSource Connections, and co-authored Relevant Search and AI Powered Search.

Doug has trained and advised teams at the Wikimedia Foundation, Wayfair, and AWS, and created Quepid, SearchArray, and the Elasticsearch Learning to Rank plugin.
