Hamel Husain

ML Engineer with 20 years of experience

Shreya Shankar

ML Systems & Applied AI Evals Researcher


Stop guessing if your AI works. Build the feedback loops that make it better.

🚨 Enroll now to get immediate access to our Discord community & notes so you can start learning right away. 🚨

All students get:

♾️ Unlimited access to future cohorts & office hours: never worry about timing or missing new material.

🗄️ Lifetime access to all materials!

🤖 6 months of unlimited access to our new AI Eval Assistant (more info below).

🧑🏫 10+ hours of office hours to maximize the value of live interaction.

🏫 A Discord community with continuous access to instructors to get unstuck (even after the course!).

---

Do you catch yourself asking any of the following questions while building AI applications?

1. How do I test applications when the outputs require subjective judgements?

2. If I change the prompt, how do I know I'm not breaking something else?

3. Where should I focus my engineering efforts? Do I need to test everything?

4. What if I have no data or customers? Where do I start?

5. What metrics should I track? What tools should I use?

6. Can I automate testing and evaluation? If so, how do I trust it?

If so, this course is for you.

This course uses a flipped-classroom format: all lectures are professionally recorded, so live sessions can focus on office hours and student interaction.

What you’ll learn

Learn proven approaches for quickly improving AI applications. Build AI that works better than the competition, regardless of the use case.

Understand instrumentation and observability for tracking system behavior.

Learn approaches for generating synthetic data to maximize error discovery and bootstrap product development.

Understand how to choose the right tools and vendors for you, with deep dives into the most popular solutions in the evals space.

Apply data analysis techniques to rapidly find systematic issues in your product regardless of the use case.

Master the processes and tools to annotate and analyze data quickly and efficiently.

Learn how to analyze agentic systems (tool calls, RAG, etc.) to quickly identify systematic patterns and errors.

Create evals that are customized to your product and provide immediate value, NOT generic off-the-shelf evals (which do not work).

Align evals with stakeholders & domain experts so that you can trust the evals on a scientific basis.

Create high-quality LLM-as-a-judge and code-based evals with a systematic, iterative process.

Learn how to measure & debug RAG systems for retrieval relevance and factual accuracy.

Understand how to tame multi-step pipelines so you can quickly trace error propagation and find the root causes of errors.

Master techniques that apply to multi-modal settings, including text, image, and audio interactions.

Learn how to set up automated evaluation gates in CI/CD pipelines.

Understand methods for consistent comparison across experiments, including how to prepare and maintain datasets to prevent overfitting.

Implement safety and quality control guardrails.

Develop a strong intuition for when to write an eval, and when NOT to write one.

Learn how to design interfaces to remove friction from reviewing data and collect higher quality data with less effort.

Learn how to avoid common pitfalls surrounding team organization, collaboration, responsibilities, tools, automation, and metrics.

Who this course is for

Engineers and PMs who ship prompt changes and hope nothing breaks. (You'll learn to measure impact before and after every change.)

Teams still spot-checking AI outputs by hand instead of measuring systematically. (You'll learn how to build automated evals you can trust.)

Leaders who don't know where their AI is failing or where to invest resources. (You'll learn how to systematically find & prioritize issues.)

What's included

Live sessions

Learn directly from Hamel Husain & Shreya Shankar in a real-time, interactive format.

Lifetime Access to All Recordings & Materials

Revisit the materials and lectures anytime. Recordings and slides are made available to all students.

150+ Page Course Reader

We provide a course reader with detailed notes to supplement your learning and act as a future reference as you work on evals.

Lifetime Access To Discord Community

Private Discord for questions, job leads, and ongoing support from the community (1,000+ students and growing).

10+ Office Hour Q&As

Open office hours for questions and personalized feedback.

4 Homework Assignments With Solutions & Walkthroughs

Optional coding assignments & walkthrough videos so you can practice every concept.

Certificate of Completion

Share your new skills with your employer or on LinkedIn.

Detailed Vendor & Tools Workshops

Curated talks from industry experts working on evals, as well as workshops with vendors building eval tools.

Maven Guarantee

This course is backed by the Maven Guarantee. Students are eligible for a full refund up until the halfway point of the course.

Course syllabus

10 live sessions • 77 lessons

Week 1

Mar 19

Optional: Live Office Hours 1

Thu 3/19, 3:30 PM–4:30 PM (UTC)

Mar 20

Optional: Live Office Hours 2

Fri 3/20, 3:30 PM–4:30 PM (UTC)

Start Here

Lesson 1: Fundamentals & Lifecycle of Application-Centric Evals

Lesson 2 & 3: Systematic Error Analysis

Office Hours

FAQ and Links

Free resources

Schedule

Live sessions

2-3 hrs / week

Lectures are professionally recorded & edited to save you time and cut out the fluff. We maximize live interaction through office hours and workshops.

Thu, Mar 19

3:30 PM—4:30 PM (UTC)

Fri, Mar 20

3:30 PM—4:30 PM (UTC)

Tue, Mar 24

3:30 PM—4:30 PM (UTC)

Optional Homework Assignments

1-2 hrs / week

Optional coding homework assignments where you implement evals from scratch. We provide all students with solutions and associated walk-throughs.

Testimonials

"Hamel has provided exactly the tutorial I was needing for [evals], with a really thorough example case-study ... Hamel's content is fantastic, but it's a bit absurd that he's single-handedly having to make up for a lack of good materials about this topic across the rest of our industry!"

Simon Willison, Creator of Datasette

"Hamel is one of the most knowledgeable people about LLM evals. I've witnessed him improve AI products first-hand by guiding his clients carefully through the process. We've even made many improvements to LangSmith because of his work."

Harrison Chase, CEO, LangChain

"Shreya and Hamel are legit. Through their work on dozens of use cases, they've encountered and successfully addressed many of the common challenges in LLM evals. Every time I seek their advice, I come away with greater clarity and insight on how to solve my eval challenges."

Eugene Yan, Senior Applied Scientist

"Hamel and Shreya are technically goated, deeply experienced engineers of AI systems who just so happen to have impeccable vibes. I wouldn't learn this material from anyone else."

Charles Frye, Dev Advocate, Modal

"When I have questions about the intersection of data and production AI systems, Shreya & Hamel are the first people I call. It's often the case that they've already written about my problem. You can’t find more qualified folks to teach this, anywhere."

Bryan Bischof, Director of Engineering, Hex

"I was seeking help with LLM evaluation and testing for our products. Hamel's widely-referenced work on evals made him the clear choice. He helped us rethink our entire approach to LLM development and testing, creating a clear pathway to measure and improve our AI systems."

George Siemens, CEO, Matter & Space

Our primer on Evals with Lenny Rachitsky

https://www.youtube.com/watch?v=BsWxPI9UM4c

See what our students have to say

See more testimonials at https://bit.ly/eval-reviews


Can PMs also get value from this course? Yes! This is what PMs are saying:


https://x.com/ttorres/status/1933296711658815722

Frequently asked questions

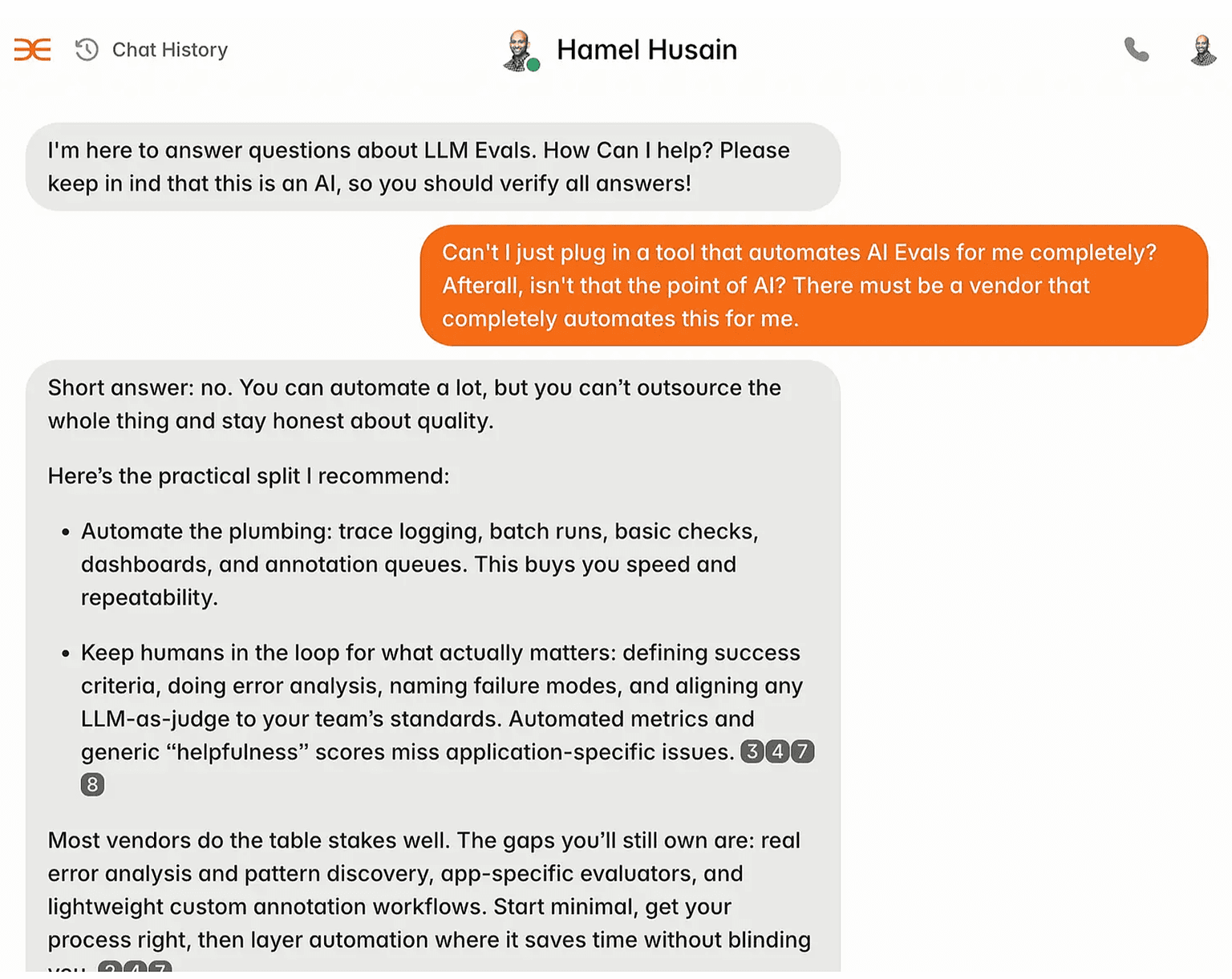

6 Months of Access to an AI Evals Assistant with Everything We've Said About Evals

This is a special tool for students and not available for sale. Experimental and for learning only.

$5,000 USD