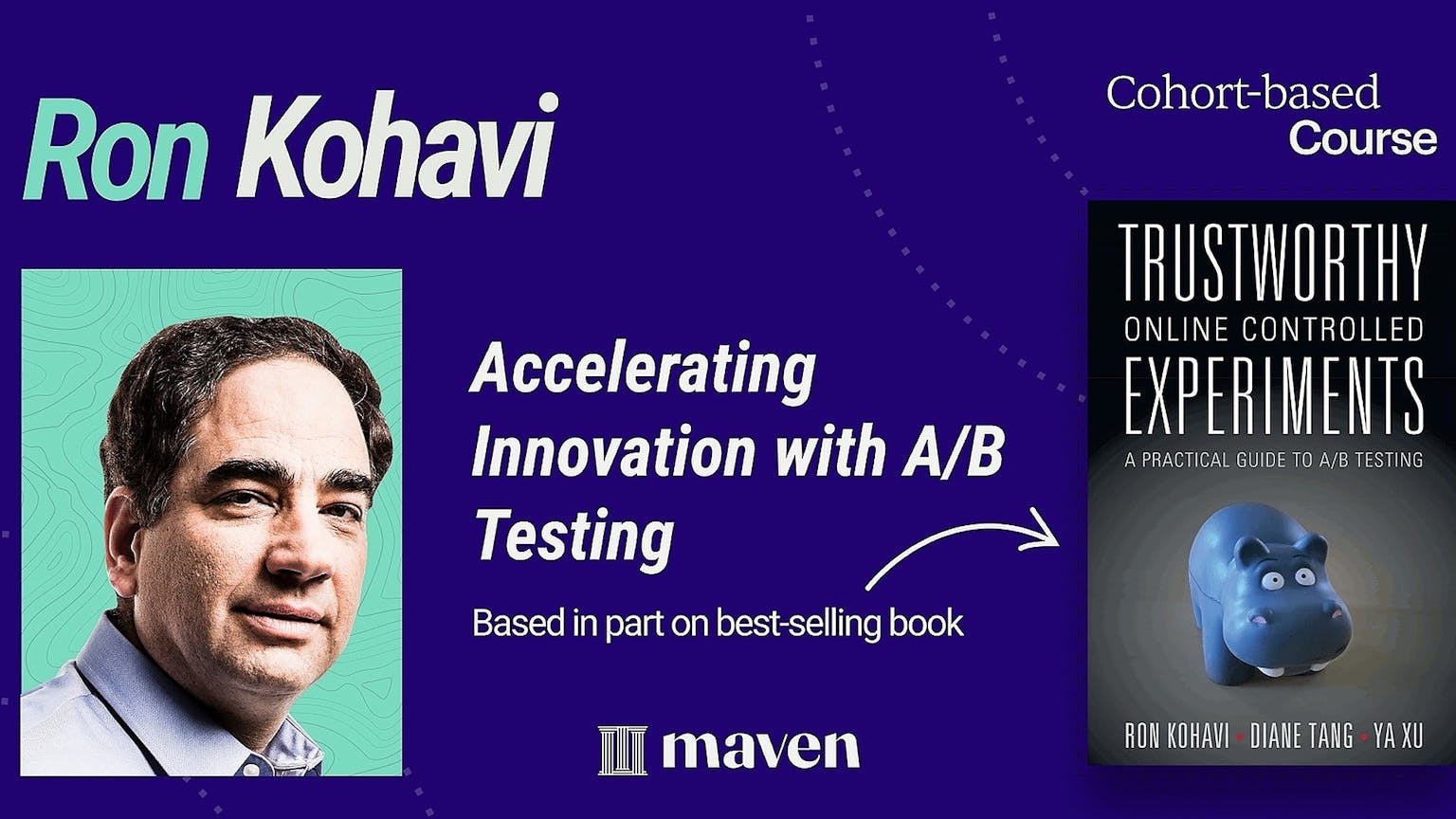

Accelerating Innovation with AB Testing

2 Weeks · Cohort-based Course

Learn from a world-leading expert how to design and analyze trustworthy A/B tests to evaluate ideas, integrate AI/ML, and grow your business

Course overview

Empower your organization to be data-driven and innovative

Through multiple real examples of well-run experiments and real stories from Microsoft, Amazon, and Airbnb, you will see the humbling reality that we are terrible at assessing the value of ideas.

Trivial changes can be surprisingly useful, whereas large efforts often fail. Accelerate innovation by building Minimum Viable Products and Features (MVPs), and make the organization humbler and more evidence-based as it learns to use evidence from the gold standard in science: the controlled experiment.

You will understand the challenges in designing and running trustworthy controlled experiments, or A/B tests, including the importance of the Overall Evaluation Criterion (OEC), scaling, pitfalls, and Twyman's law.

The second week covers additional topics, some of them more technical: cultural challenges, institutional memory, the maturity model, observational causal studies, offline evaluations, AI/machine learning and triggering, scaling, build vs. buy, open challenges, and requested topics.

The advanced topics course is a follow-on to this: https://bit.ly/AdvancedABFS

Who is this class for?

01

Data science managers and scientists will be able to design experiments and interpret their results in a trustworthy manner

02

Program managers focused on growth, revenue, conversions, and prioritization will understand how to provide the org with robust, clear metrics

03

Engineering leaders will be able to make their organizations more data-driven and efficient, with fewer severe incidents, through A/B tests

What you will learn from this 10-hour course

Understand and internalize the humbling reality that we are poor at assessing the value of ideas: most ideas fail!

You will hear multiple real, memorable stories and examples, many of which never made it into books or articles. They were chosen from over 20 years of experimentation. You'll have the data to show that the poor success rate is documented across multiple organizations; expect it!

Understand the key advantages and limitations of A/B testing

Understand key concepts like causality, hierarchy of evidence, and key organizational tenets required for effective experimentation.

Learn how to design metrics and the Overall Evaluation Criterion

Designing metrics is hard. There is a hierarchy of metrics, and there are perverse incentives. The most important metrics make up the Overall Evaluation Criterion (OEC). We will look at good and bad examples.

Learn how to design trustworthy A/B tests

Getting numbers is easy; getting numbers you can trust is hard. You'll understand common pitfalls and how to design reliable and trustworthy tests.

Learn about the cultural challenges

Covers the cultural challenges, the humbling results (most ideas fail; pivoting, iterating, learning), institutional memory, ideation, prioritization, and experimentation platforms.

Learn about the relationship to AI and Machine Learning Modeling

When building AI or machine learning models, use A/B testing and triggering to evaluate models that were built offline on historical data.

Learn about complementary quasi-experimental techniques

When you can't run an A/B test: quasi-experimental methods and the risks of observational causal studies.

Learn about existing challenges

What are the key challenges and open questions in the field?

Learn about YOUR topic of interest

If there is something specific you want to cover, time is allocated to discuss topics voted on by the audience.

Technical to your needs

The course focuses on developing intuition and addressing common misunderstandings, without the details of the statistics, which you can find in many books. We cover p-values, statistical power, and triggering.

You can go as technical as you want in the Q&A and community discussions.

What’s included

Live sessions

Learn directly from Dr. Ronny Kohavi in a real-time, interactive format.

View recordings

Go back to course content and recordings for at least 6 months (no plan to remove access)

Community of peers

Stay accountable and share insights with like-minded professionals. Join the LinkedIn alumni group

Certificate of completion

Share your new skills with your employer or on LinkedIn.

Course syllabus

6 live sessions • 6 lessons

Week 1

Sep 8, Session 1: Introduction, interesting examples, organizational tenets (2 hours + optional 1/2-hour bonus & Q&A)

Sep 9, Session 2: End-to-end example, metrics, and the OEC (2 hours + optional 1/2-hour bonus & Q&A)

Sep 11, Session 3: Statistics, end-to-end example 2, Twyman's law, ideas, prioritization (2 hours + optional 1/2-hour bonus & Q&A)

Week 2

Sep 15, Session 4: Cultural challenges, maturity, observational causal studies, pitfalls (2 hours + optional 1/2-hour bonus & Q&A)

Sep 18, Session 5: AI/ML, triggering, leakage/interference, scaling, requested topics (2 hours + optional 1/2-hour bonus & Q&A)

Post-course

Sep 30, Optional: Alumni event for Accelerating Innovation with A/B Testing

What students are saying

Testimonials: What People on Sphere wrote (the platform where most cohorts were taught before Maven)

Dylan Lewis

Ryan Lucht

Pavan Gangisetty

Han Dong

Scott Theisen

Sharath Bulusu

James Niehaus

Ishan Goel

Jakub Linowski

Deborah O'Malley

Aaro Wroblewski

Jialin Huang

Scott Rome

Aaron Gasperi

Jessica Porges

Haiyan Chen

Emma Ding

Manuel de Francisco Vera

Markus Wiggering

Sorin Tarna

Gabriel Rodriguez

100% Customer Satisfaction Guarantee

Not satisfied after attending the first session and before session 2 starts? Get a full refund

Companies with two or more people that took the course

Top: Companies sorted by approximate market cap. A/B Vendors: sorted by alphabetical order

Your instructor

Dr. Ronny Kohavi

Executive at Microsoft, Airbnb, Amazon, author of best-selling A/B testing book

Ronny Kohavi was an executive at Amazon, Microsoft, and Airbnb and has over 20 years of experience running A/B tests and leading experimentation teams. He loves to teach, and his papers have over 65,000 citations. He co-authored the best-selling book Trustworthy Online Controlled Experiments: A Practical Guide to A/B Testing (with Diane Tang and Ya Xu), which is a top-10 data mining book on Amazon. He is the most viewed writer on Quora's A/B testing topic and received the Individual Lifetime Achievement Award for Experimentation Culture in Sept 2020.

Ronny holds a PhD in Machine Learning from Stanford University.

Course schedule

10-12.5 hours total (2.5 hours optional)

Week 1: Mon, Tue, Thu

8-10AM Pacific Time + 1/2-hour bonus/day

Three 2-hour sessions, each with an optional 1/2-hour bonus, in week 1 on Monday, Tuesday, and Thursday.

The bonus time is optional; the material may be more technical, and there is time for additional Q&A.

Week 2: Mon, Thu

8-10AM Pacific Time + 1/2-hour bonus/day

Two 2-hour sessions, each with an optional 1/2-hour bonus, in week 2 on Monday and Thursday.

Optional Q&A

15 minutes after each session

Learning is better with cohorts

Real-world examples

We will review multiple real A/B tests

Deep-dive design and analysis of two A/B tests

We will take a deep dive into the full lifecycle of designing an A/B test to evaluate a hypothesis and analyzing the results

Learn with a cohort of peers

Join a community of like-minded people who want to learn and grow alongside you

Requested topics

Missing anything? We have allocated time to suggest topics, collect votes, and discuss them in the last session

Join an upcoming cohort

Accelerating Innovation with AB Testing

8 Sept 2025

$1,999

Frequently Asked Questions

Quick Introduction to A/B Testing

The pros and cons of A/B testing

Necessary ingredients

Humbling statistics

Twyman's Law

Questions? Add and vote at

Get the free recording

The ultimate guide to A/B testing: Podcast with Lenny Rachitsky

The podcast has been viewed over 50,000 times.

Get it by entering your email

Chapter 1 of Trustworthy Online Controlled Experiments: a Practical Guide to A/B Testing

Full text of Chapter 1 and table of contents for this best-selling book by the course instructor.

Get it by entering your email
