Advanced Prompt Engineering for LLMs

4 Days

·Cohort-based Course

Use advanced prompting techniques and tools to improve the capabilities, performance, and reliability of LLM-powered applications

Course overview

Effectively prompting and building with LLMs

OVERVIEW OF THE COURSE

LLMs (Large Language Models) show powerful capabilities, but not knowing how to use them effectively and efficiently often leads to reliability and performance issues. Prompt engineering helps to discover capabilities, improve reliability, reduce failure cases, and save on computing costs when building with LLMs.

This is a hands-on, technical course that teaches how to effectively build with LLMs. It covers the latest prompting techniques (e.g., few-shot, chain-of-thought, RAG, prompt chaining) that you can apply to a variety of complex use cases such as building personalized chatbots, LLM-powered agents, prompt injection detectors, LLM-powered evaluators, and much more.
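To give a flavor of one technique named above, here is a minimal sketch of few-shot prompting in Python. It only assembles the prompt text and does not call any model. The function name `build_few_shot_prompt`, the `Text:`/`Label:` layout, and the sentiment examples are illustrative assumptions, not material taken from the course.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: instruction, labeled demonstrations, new input.

    The "Text:"/"Label:" layout is one common convention (an assumption here),
    not a required format.
    """
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Label: {label}")
        lines.append("")  # blank line between demonstrations
    # The final block leaves the label empty so the model completes it.
    lines.append(f"Text: {query}")
    lines.append("Label:")
    return "\n".join(lines)


# Hypothetical sentiment-classification demonstrations.
prompt = build_few_shot_prompt(
    "Classify the sentiment of each text as positive or negative.",
    [
        ("I loved this product.", "positive"),
        ("It broke after a day.", "negative"),
    ],
    "The battery lasts forever.",
)
print(prompt)
```

The resulting string can be sent to any LLM API as the user message; the demonstrations show the model the expected output format before it sees the new input.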

Topics include:

• Taxonomy of Prompting Techniques

• Tactics to Improve Reliability

• Structuring Inputs and LLM Outputs

• Zero-shot/Few-shot/Many-Shot Prompting

• Chain of Thought Prompting

• Self-Reflection & Self-Consistency

• Meta Prompting & Automatic Prompt Engineering

• ReAct Prompting Framework

• Retrieval Augmented Generation (RAG)

• Fine-Tuning LLMs

• Function Calling & Tool Usage

• LLM-Powered Agents (Agentic Workflows)

• LLM Evaluation & Judge LLMs

• AI Safety & Moderation Tools

• Adversarial Prompting (Jailbreaking and Prompt Injections)

• Common Real-World Use Cases of LLMs

• Overview of LLM Tools

... and much more

PREREQUISITES

• This is a technical course and you need to be knowledgeable in Python to take this course.

• Basic knowledge of LLMs is beneficial but not required.

If you don't have experience using Python, we recommend our beginner's course: https://maven.com/dair-ai/llms-for-everyone

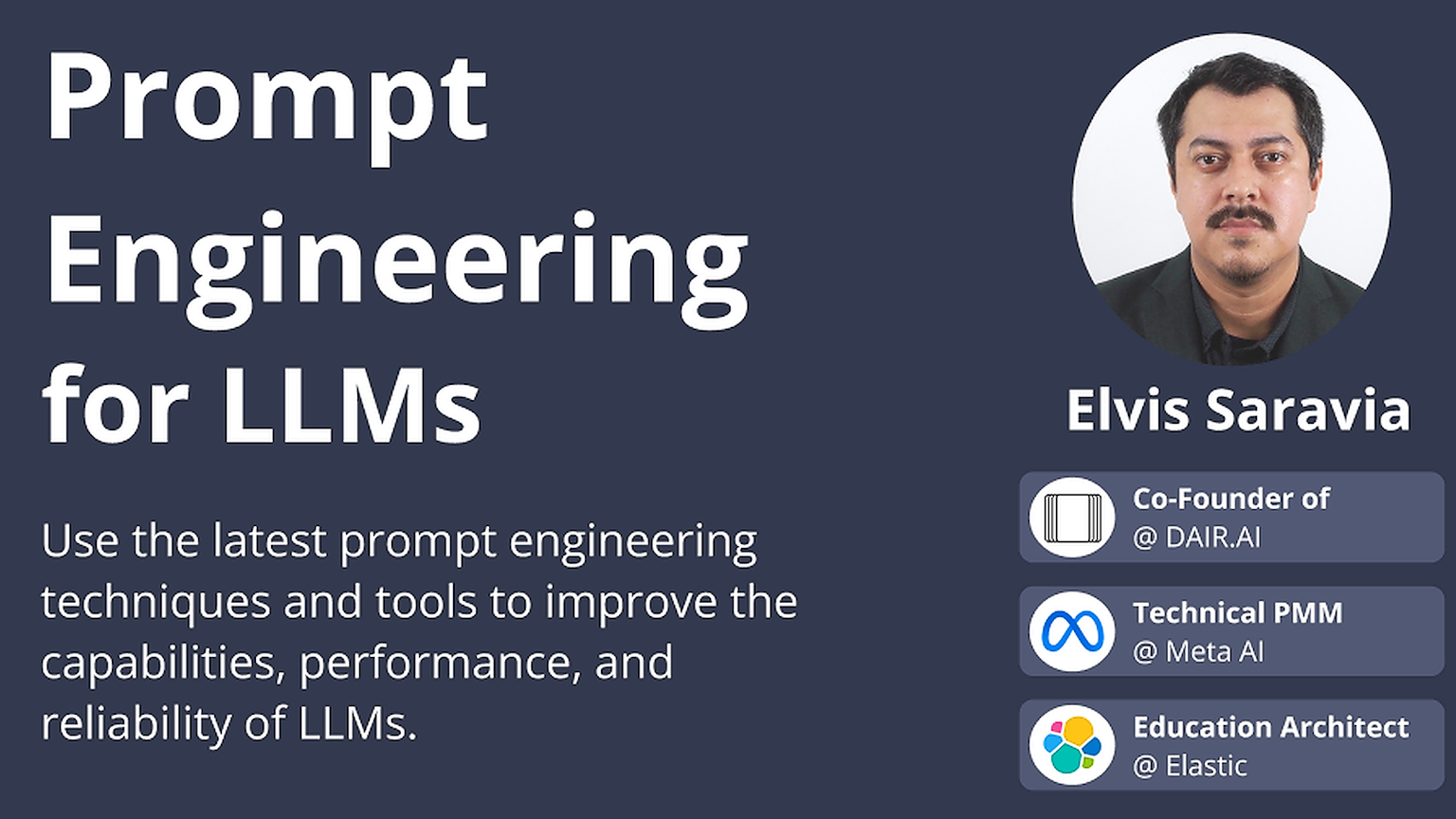

ABOUT THE INSTRUCTOR

Elvis, the instructor for this course, has vast experience doing research and building with LLMs and Generative AI. He is a co-creator of the Galactica LLM and author of the popular Prompt Engineering Guide. He has worked with world-class AI teams like Papers with Code, PyTorch, FAIR, Meta AI, Elastic, and many other AI startups.

Reach out to training@dair.ai for any questions, corporate trainings, and group/student discounts.

WHO THE COURSE HAS HELPED

This course has helped AI startups, freelancers, and professionals at companies like Apple, Microsoft, Google, LinkedIn, Amazon, Coinbase, Asana, Airbnb, Intuit, JPMorgan Chase & Co, and many others.

Who is this course for

01

Developers building applications on top of LLMs

02

Builders wanting to improve LLM reliability, efficiency, and performance for their LLM-powered applications.

03

Professionals interested in leveling up on how to better use and apply LLMs.

What you’ll get out of this course

Design and optimize prompts

- Learn key elements and tactics for designing effective prompts

- Design, test, and optimize prompts to improve model performance and reliability for different tasks such as text summarization and information extraction

Build a robust framework to effectively apply advanced prompt engineering techniques

- Review and apply the latest and most advanced prompt engineering techniques (few-shot learning, chain-of-thought, RAG, prompt chaining, self-consistency, self-verification, etc.)

- Build with approaches like ReAct, RAG, function calling, and LLM-powered agents
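As an illustration of one technique listed above, the sketch below shows the core idea of self-consistency: sample several answers to the same prompt and keep the most frequent one. The `sample_answer` callable stands in for a real LLM call with sampling enabled; it, the function name `self_consistent_answer`, and the canned answers are assumptions made for this example.

```python
from collections import Counter
from typing import Callable


def self_consistent_answer(
    sample_answer: Callable[[str], str],
    prompt: str,
    n_samples: int = 5,
) -> str:
    """Sample n_samples answers for the prompt and return the majority answer."""
    answers = [sample_answer(prompt).strip() for _ in range(n_samples)]
    # most_common(1) returns [(answer, count)] for the most frequent answer.
    return Counter(answers).most_common(1)[0][0]


# Stub "model" that replays canned answers, so the example runs offline.
canned = iter(["42", "41", "42", "42", "40"])


def fake_model(prompt: str) -> str:
    return next(canned)


result = self_consistent_answer(fake_model, "What is 6 * 7?", n_samples=5)
print(result)
```

In practice each sample would come from a chain-of-thought completion, with only the final answer extracted before voting.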

Develop use cases and build applications

- Develop use cases such as tagging systems, personalized chatbots, evaluation systems, product review analyzers, and more

- Build advanced applications that involve combining knowledge with conversational assistants and using LLMs with external tools and knowledge

Perform evaluations for your applications

- Design a robust framework for evaluating and measuring the quality, diversity, safety, and robustness of LLMs

- Compare prompt engineering, RAG, and fine-tuning

- Cover safety topics like prompt injection and moderation tools

Learn prompt engineering tools

- Review the latest prompt engineering and LLM tools such as ChatGPT, LlamaIndex, Comet, LangChain, Flowise, Scale AI's Spellbook, and many more

- Discuss current trends, papers, and future directions in prompt engineering

What’s included

Live sessions

Learn directly from Elvis Saravia in a real-time, interactive format.

Lifetime access

Go back to course content and recordings whenever you need to.

Community of peers

Stay accountable and share insights with like-minded professionals.

Certificate of completion

Share your new skills with your employer or on LinkedIn.

Maven Guarantee

This course is backed by the Maven Guarantee. Students are eligible for a full refund up until the halfway point of the course.

Course syllabus

6 live sessions • 4 lessons • 4 projects

Week 1

Oct 21 • Prompt Engineering for LLMs - Session 1

Oct 22 • Prompt Engineering for LLMs - Session 2

Oct 22 • Optional: Prompt Engineering for LLMs - Office Hour 1

Oct 23 • Prompt Engineering for LLMs - Session 3

Oct 23 • Optional: Prompt Engineering for LLMs - Office Hour 2

Oct 24 • Prompt Engineering for LLMs - Session 4

Session 1 - Structuring Effective Prompts

Session 2 - Advanced Prompting & Improving LLM Reliability

Session 3 - LLM Evaluation

Session 4 - RAG, Agentic Workflows & LLM Tools

Meet your instructor

Elvis Saravia

Elvis is a co-founder of DAIR.AI, where he leads all AI research, education, and engineering efforts. His primary interests are training and evaluating large language models and developing applications on top of them. He is the co-creator of the Galactica LLM and was a technical product marketing manager at Meta AI where he supported and advised world-class teams like FAIR, PyTorch, and Papers with Code. Prior to this, he was an education architect at Elastic where he developed technical curriculum and courses.

What people are saying

Yevgeniy S. Meyer, Ph.D.

Miguel Won

Yashwanth (Sai) Reddy

Lawrence Wu

Course schedule

4 Day Intensive

Live Sessions

October 21-24

4 live sessions (includes lectures, demos, exercises, and projects)

Live Office Hours

1 hour

Optional office hours to ask questions and receive guidance related to the course topics

1-on-1 Sessions

30 mins

Book a free 1-on-1 session with the instructor to further discuss careers, products, use cases, or anything related to building with LLMs.

Bonus content

2 hours per week

Includes additional readings and self-paced tutorials + bonus exercises to practice prompt engineering techniques and tools for different use cases and applications

Learning is better with cohorts

Active hands-on learning

This course builds on live workshops and hands-on projects

Interactive and project-based

You’ll be interacting with other learners through breakout rooms and project teams

Learn with a cohort of peers

Join a community of like-minded people who want to learn and grow alongside you
